Event Supervisor Review

Hi everyone!

My name is Jaime Yu, and this is my first ever event supervisor review post, so let us kick it off strong. I had the honor of being a co-tournament director at Lexington Invitational as well as an event supervisor for several events.

- Co-event supervisor for Dynamic Planet along with David Zhou and Daniel Zhang. Test reviewed by Benny Ehlert (BennyTheJett)

- Co-event supervisor for Meteorology along with David Zhou, Daniel Zhang and Evan Xiang (Banana2020). Test reviewed by Gwennie Liu.

- Co-event supervisor for Fossils along with David Zhou and Daniel Zhang. Test reviewed by Benny Ehlert (BennyTheJett)

- Co-event supervisor (more like minimal-question contributor) for Ornithology along with Kayla Yao (ArchdragoonKaela) and Robin Pan

Thank you to everyone who attended our competition - we truly appreciate each and every one of you. Some of these tests were very hard, and I hope you learned a lot from them!

Dynamic Planet

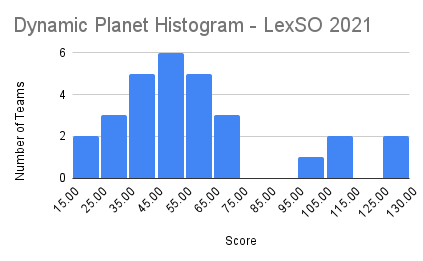

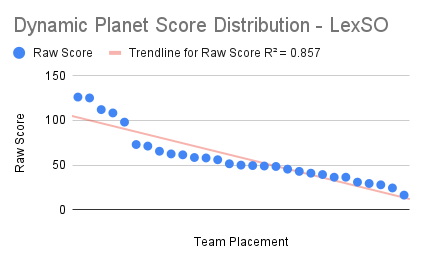

Statistics and Graphs:

Mean: 58.47

Median: 50

Standard Deviation: 28.9

Max: 126

Total Possible Points: 198

Average number of correct Answers/Question: 11.06 (total 29 teams)

Thoughts:


Congratulations to Beckendorff Junior High School for winning this event!

General Organization: Our test was organized by topic and then somewhat by difficulty, with each “topic” progressing from easy to hard. I really enjoyed this method of organization when I saw it at MIT Invitational, so I decided to implement it in our test.

Thoughts by section:

General: This was short, designed as a warm-up, and all MC. Students generally did very well on this, as expected.

Chemical: It started ramping up a little bit after a quick-fire MC section at the beginning. Students seemed to have trouble with the sediment section overall, and it seems like a lot of Dynamic Planet students lack an in-depth knowledge of different sediment types. Surprisingly, only one school attempted the residence time question, which was fairly straightforward and honestly a fun question. Looking back, maybe I should have made it worth more points. There was 1 correct answer - I am very proud of that team for pushing through. Generally though, students did fairly well on this section, with an average of about 14 correct answers per question.

Physical: This section was divided into several parts, with a “math” section at the end. It was also the longest section by far. Teams struggled with application questions in general throughout this section - any question that required explaining remained mostly untouched. A lot of teams skipped the math section - I encourage you to try it when you have time. It is not as scary as it looks, I promise. This section was a bit harder, with about 10 correct answers per question.

Geology: After a somewhat typical start, it transitioned into a fairly atypical geology section, written mostly by Daniel, which required a lot of critical thinking and some primitive math reading. Students generally struggled with this section, as I had expected, with an average of 8 correct answers per question. However, some students came to me afterward asking for ways to improve - great job reaching out if you are reading this!

General Thoughts: Overall, I think this test offered fairly good separation of teams, and we had nearly a bell curve with some outlying teams. I think I added a decent amount of easier “filler” questions. However, in hindsight some questions, such as the entertainment one, were far above a middle school level, and I should have made them simpler and more approachable.

Feedback Form and Test Release:

Folder with tests:

https://drive.google.com/drive/folders/ ... sp=sharing

Feedback form (PLEASE FILL OUT THANKS!)

https://forms.gle/3vZDXHBSyZxLQ7QJ9

Fossils

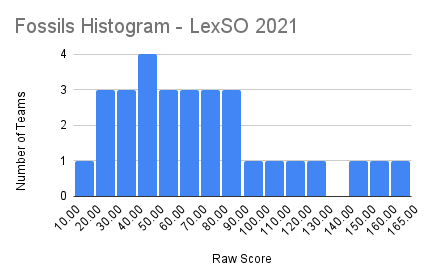

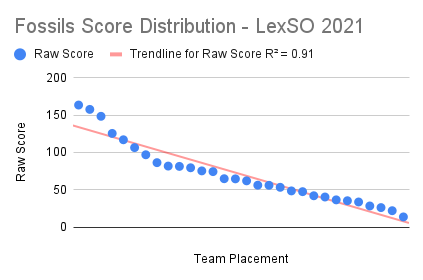

Statistics and Graphs:

Mean: 72.64

Median: 64.49

Standard Deviation: 39.48

Max: 163.33

Total Possible Points: 271.5

Average number of correct Answers/Question: 5.74 (total 30 teams)

Thoughts


Congratulations to Jeffrey Trail Middle School for winning this event!

General Organization: We wrote this test with the intention of covering all the topics on the fossils list, to give students the best preparation for nationals and to help them figure out what topics they need to work on. As such, we wrote the test following the order of the list. There was some concern over whether or not this would help with ID, but I do not think it really made a difference - if you couldn’t tell a fish from a coral, mixing up the order probably wouldn’t have harmed you too much. The first 9 sections were in an image-of-fossil then questions-about-it format, while the last 3 covered all the general points. The test was very long as a result, and we were not expecting most teams to finish completely. A separate image sheet was provided in addition to the embedded images in an attempt to mitigate the scrolling issue. There were no complaints, so I am assuming the issue was successfully mitigated.

Thoughts on the Sections: Too many sections to go one-by-one, so I am going to summarize and pick out highlights.

Identification: I was afraid that ID would be too easy, but that was not the case, and the feedback I got said that there was a good deal of challenging ID. Thank you so much to Alec Sirek (@alecsiurekphotography) for letting us use some of his photos. Other photos were obtained from god-knows-which page of Google and from obscure searches to try to provide new images. Generally, I think we did a good job of this and provided interesting specimens.

Specimen Questions: The questions were mostly pulled from my binder and background knowledge, with limited additional research. We tried to ask about topics we haven’t seen too much to avoid redundancy. However, there was a low number of responses per question, so I believe that we may have made them slightly too hard and should have added in more filler questions. Or it could just be a side effect of people jumping around in a long test. Fossils can easily be a mindless copy-paste-from-binder event, and I hope that some of our questions required some thinking and putting things into context - which I believe is one of the best parts of fossils.

Station highlights: Stations 5 - 7 (fish through birds) had the lowest correct answers per question at around 2.5. These stations were my personal favorites, as they had a focus on evolution and showing change over time (I have studied bird evolution somewhat extensively, so I might be biased). Students seemed to have trouble answering some of the questions about the purpose of different morphological elements as well. Station 4 (on molluscs) had the most correct answers per question at 10, and students found it fairly straightforward as it was primarily pull-from-binder recall.

General Sections: In writing this test, I wanted to go over all the general topics in the rules; however, I was afraid of making the general section too long. 3/12 stations seemed like a good length though, and most people agreed. Some tests tend to neglect general, and as it's undoubtedly an important part of paleontology, I thought it was important to include. The geology section had some good stuff on practical analysis of a rock section, as well as analysis of an image of a rock outcrop. Following was a Lagerstätten/geologic time section - people had little trouble with this. And lastly we had ichnofossils, which is a weak point for everybody - me included. Yes, the section is hard, but hopefully everybody learned a bit from it.

General Thoughts: Overall, I am very happy with this test, and I think out of the three main tests I wrote this time, it was probably the best. The score distribution turned out nicely with all the teams spread out, and the top 7 teams separated by about 10 points each. This was the last Fossils test many kids would be taking in a while, and in a way, it ended my Fossils career - I sincerely hope it was a good one.

Feedback Form and Test Release:

Folder with tests:

https://drive.google.com/drive/folders/ ... sp=sharing

Feedback form (PLEASE FILL OUT THANKS!)

https://forms.gle/UiRJwZ6JcnCs2G287

Meteorology

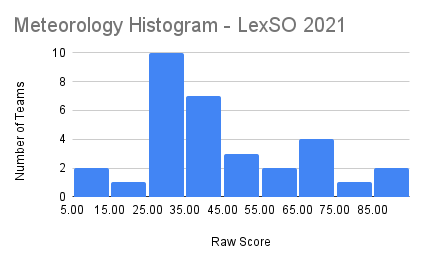

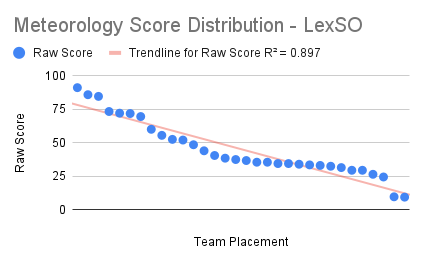

Statistics and Graphs:

Mean: 48.4

Median: 38.5

Standard Deviation: 20.7

Max: 91

Total Possible Points: 164.5

Average number of correct Answers/Question: 9.84 (total 32 teams)

Thoughts


Congratulations to Beckendorff Junior High School for winning this event!

General Organization: In writing this test, I took heavy inspiration from Gwennie’s and Tim’s (Umaroth) tests. This test was organized into sections by topic. We hoped to write a test that encouraged and required critical thinking. To do that, students were asked to do a lot of map analysis, and a supplemental document was provided. Each part mostly progressed from less difficult introductory MC questions to harder short answer questions.

Review by Section:

Thunderstorms: Our longest section in terms of questions, to cover all of the many thunderstorm-related topics. Students seemed to do ok on this section, getting nearly 10 correct answers per question. There were some math calculations on how the storm was moving, which most teams skipped. I thought there was a fair number of points offered per question, so it was likely just too intimidating.

Tropical Storms: This appeared to be the easiest section by far, with about 15 correct answers per question. It focused on 1 image and asked detailed questions about the life cycle of a typhoon.

Winter Storms: This section was harder for students than I had initially expected, with only 6 correct answers per question on average. Students had a hard time figuring out what role the upper-level winds played and analyzing how the storm was strengthened.

Mid-latitude Cyclones: This section had a lot of points but was not too long, since many of the questions were worth a lot of points. There was an average of 8.7 correct answers per question. Once again, this was similar to winter storms, where students had trouble analyzing the upper-level air flow and how it matched up with and fed the lower-level air flow.

General: Some forecasting and radar stuff that we did not put in anywhere else, and then 2 cloud base calculation questions. Overall, teams did somewhat decently on this section, but it did not offer as many “free” points as we had initially hoped.

General Thoughts: Overall, I am fairly happy with how the score distributions turned out, with the exception of a somewhat large clump of scores near the back. Our general section should have included more easy questions to somewhat pad the harder ones. This test asked a lot about the larger scale and upper-atmospheric features that play into the storms that we see, and it appeared that students in general struggled with it, so I hope this test taught everybody a little.

Feedback Form and Test Release:

Folder with tests:

https://drive.google.com/drive/folders/ ... sp=sharing

Feedback form (PLEASE FILL OUT THANKS!)

https://forms.gle/dnjnDGVLJ1euPbFg8

Ornithology

See co-event supervisor Kayla’s post below

Final Thoughts

Test Difficulty: For an invitational, the general feedback was that our tests were mostly long and hard - which is what we were going for. For my personal events, the tests were slightly harder than what I was aiming for, and next time I will probably add in some more easier questions to better separate the lower teams and to give students more confidence.
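For anyone who wants to compare difficulty across the three tests, here is a rough sketch that turns the raw numbers from the statistics sections above into percentages of the total possible points. The figures are copied from this post; the names `STATS`, `percent_of_total`, and `summarize` are made up for illustration and are not part of any scoring tool we used.

```python
# Figures copied from the "Statistics and Graphs" sections of this post.
# The dictionary layout and function names are illustrative assumptions.
STATS = {
    "Dynamic Planet": {"mean": 58.47, "max": 126.0, "total": 198.0},
    "Fossils": {"mean": 72.64, "max": 163.33, "total": 271.5},
    "Meteorology": {"mean": 48.4, "max": 91.0, "total": 164.5},
}


def percent_of_total(score, total):
    """Return score as a percentage of total, rounded to one decimal place."""
    return round(100.0 * score / total, 1)


def summarize(stats):
    """Map each event to its mean and max score as a percent of total points."""
    return {
        event: {
            "mean_pct": percent_of_total(s["mean"], s["total"]),
            "max_pct": percent_of_total(s["max"], s["total"]),
        }
        for event, s in stats.items()
    }


for event, pct in summarize(STATS).items():
    print(f"{event}: mean {pct['mean_pct']}%, max {pct['max_pct']}%")
```

By this measure, the mean score on each test was a bit under 30% of the available points, and the top score was roughly 55% to 64%.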

As a tournament director, I want to say thank you to everybody who came and made this possible. Thank you all for participating, making our dream come true. We started this season as a school nobody knew, with plans to make a small within-town scrimmage. This invitational has truly become a dream-come-true for all of us, and for that, we have all of you to thank.

Special thanks to all of our Event Supervisors, especially those from other schools who so graciously offered their time and help when I came asking. These include ArchdragoonKaela, sciolyperson1, builderguy135, Umaroth, willpan99, Banana2020, and BennyTheJett.

Thank you everybody for making this a fun and enjoyable experience!

Postscript: 2021 words by the way.